As a manufacturer, you need to track downtime data to identify inefficiencies, plan for maintenance, and drive continuous improvement. Automated downtime tracking has proven to deliver one of the highest returns on investment (ROI) among comparable data-driven solutions. For example, a downtime tracking solution we implemented for one manufacturer allowed them to postpone building a new $60 million facility because the increased efficiency at their current facility resulted in more cases produced per day.

While you likely have a system in place to track downtime, are you confident in the accuracy of your downtime data? Even small inaccuracies can distort your understanding of your line’s performance, resulting in wasted troubleshooting efforts, misleading overall equipment effectiveness (OEE) metrics, and missed opportunities for improvement. At Polytron, we often find that while most facilities know the importance of tracking downtime, few realize how easily errors can creep in, even with automated systems in place.

The Hidden Gaps in Downtime Tracking

It’s well known that manual data entry is prone to error: operators may forget to log an event, enter incorrect timestamps, or unintentionally misclassify downtime causes. Automated data collection isn’t immune to inaccuracies either. So how do you know whether your downtime data is accurate? Here are some questions to ask yourself and indicators to look for:

- Are your plant systems synchronized? If your PLC, OPC server, and manufacturing execution system (MES), for example, aren’t synchronized, start and end times can be skewed when an event is recorded, throwing off duration calculations and masking true root causes of issues.

- Are most of your downtime events categorized as “unknown” or “unspecified”? When too many downtime events are logged in these catch-all categories, it usually means the system doesn’t have clear definitions or the logic can’t pinpoint the cause. This is a big red flag that your data lacks structure and context.

- Do your operators frequently perform manual overrides or “corrections”? When operators need to adjust downtime reasons or durations, it suggests your automated system isn’t accurately detecting or categorizing events.

- Is your maintenance team performing excessive preventive maintenance or reactive work? When maintenance teams make equipment repairs far more often than downtime reports indicate, that’s a clue that unrecorded or misclassified downtime is occurring.

- Does it seem like you’re having too few, or too many, downtime events? If a line rarely shows downtime, or one logs hundreds of micro-stops per shift, downtime probably isn’t being measured correctly. Both extremes can point to poor event logic or system misconfiguration.

- Do your operators or managers seem not to trust the data? If phrases such as “that’s not what really happened” come up often, that’s a cultural sign your systems aren’t producing credible insights, often due to inaccurate or incomplete capture.
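Several of these indicators can be checked directly against an exported event log. The Python sketch below is illustrative only: the `DowntimeEvent` fields, the catch-all reason codes, and the 30-second micro-stop threshold are assumptions rather than values from any particular MES, and the sample events are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical catch-all reason codes; substitute the ones your system uses.
CATCH_ALL = {"unknown", "unspecified"}


@dataclass
class DowntimeEvent:
    start: datetime
    end: datetime
    reason: str

    @property
    def duration(self) -> timedelta:
        return self.end - self.start


def unknown_share(events):
    """Fraction of total downtime duration logged under catch-all reasons."""
    total = sum((e.duration for e in events), timedelta())
    if total == timedelta():
        return 0.0
    vague = sum(
        (e.duration for e in events if e.reason.lower() in CATCH_ALL),
        timedelta(),
    )
    return vague / total


def micro_stop_count(events, threshold=timedelta(seconds=30)):
    """Number of events shorter than the assumed micro-stop threshold."""
    return sum(1 for e in events if e.duration < threshold)


def recorded_duration(true_start, true_end, start_clock_offset, end_clock_offset):
    """Duration that gets logged when start and end are stamped by different clocks."""
    return (true_end + end_clock_offset) - (true_start + start_clock_offset)


def t(hour, minute, second=0):
    """Helper for building sample timestamps on one made-up day."""
    return datetime(2024, 1, 8, hour, minute, second)


# Made-up sample data for illustration.
events = [
    DowntimeEvent(t(8, 0), t(8, 10), "jam"),
    DowntimeEvent(t(8, 30), t(8, 30, 10), "unknown"),
    DowntimeEvent(t(9, 0), t(9, 5), "Unspecified"),
    DowntimeEvent(t(9, 30), t(9, 30, 20), "unknown"),
]

print(round(unknown_share(events), 2))  # 0.35
print(micro_stop_count(events))         # 2

# A 2-minute stop whose end is stamped by a clock running 45 seconds ahead.
print(recorded_duration(t(10, 0), t(10, 2), timedelta(0), timedelta(seconds=45)))  # 0:02:45
```

In this sample, roughly a third of downtime sits in catch-all categories, and a 45-second clock offset inflates a two-minute stop by more than a third. What counts as “too many” unknowns or micro-stops is a judgment call for each line.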

It’s also important to note that a common source of downtime data errors is the attempt to implement complex downtime logic directly in the PLC. Because PLCs are designed for real-time, sequential processing, they can’t “look back” at prior events to understand causal relationships. For example, a line might stop at a bottleneck machine because of an upstream or downstream fault, but without the right logic and synchronization, that downtime may be incorrectly attributed.
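To make the “look back” idea concrete, here is a minimal Python sketch of the kind of attribution that is easier to do outside the PLC, where event history is available. Everything in it is an assumption for illustration: the machine names, the state labels, the 120-second lookback window, and the rule of blaming the earliest qualifying fault. It does not represent how any specific MES product implements this.

```python
from dataclasses import dataclass

# Hypothetical line layout, ordered upstream to downstream.
LINE_ORDER = ["filler", "capper", "labeler", "packer"]


@dataclass(frozen=True)
class StateEvent:
    machine: str
    state: str    # assumed labels: "faulted", "starved", "blocked"
    start: float  # seconds since shift start
    end: float


def attribute_stop(stop, history, lookback=120.0):
    """Return the machine a stop is attributed to, or "unknown"."""
    idx = LINE_ORDER.index(stop.machine)
    if stop.state == "faulted":
        return stop.machine              # the machine's own fault
    if stop.state == "starved":
        candidates = LINE_ORDER[:idx]    # look upstream
    elif stop.state == "blocked":
        candidates = LINE_ORDER[idx + 1:]  # look downstream
    else:
        return "unknown"

    # Faults that began before the stop and were still active
    # at some point inside the lookback window.
    faults = [
        e for e in history
        if e.machine in candidates
        and e.state == "faulted"
        and e.start <= stop.start
        and e.end >= stop.start - lookback
    ]
    if not faults:
        return "unknown"
    # Assumed tie-break: blame the fault that started first.
    return min(faults, key=lambda e: e.start).machine


# Made-up history for illustration.
history = [
    StateEvent("packer", "faulted", 100.0, 130.0),
    StateEvent("capper", "faulted", 250.0, 400.0),
    StateEvent("packer", "faulted", 560.0, 650.0),
]

print(attribute_stop(StateEvent("labeler", "starved", 300.0, 420.0), history))  # capper
print(attribute_stop(StateEvent("labeler", "blocked", 600.0, 660.0), history))  # packer
```

Note that this only works if the timestamps from each machine are comparable, which is why synchronization and attribution logic go hand in hand.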

Why Precise Downtime Data Matters

Downtime tracking isn’t just about logging events; it’s about generating actionable intelligence. Without accurate data, you’re left with a distorted view of line performance. Unreliable data also undermines trust across teams. Operators may feel blamed for downtime they didn’t cause, while maintenance and management chase issues that aren’t really issues. In short, inaccurate information can waste valuable resources and cloud decision-making.

Building an Accurate Picture of Downtime

To capture true, actionable downtime data, we recommend developing a layered approach that leverages both PLC and MES capabilities. Basic functions, such as determining equipment state and identifying which fault occurred first, should reside in the PLC. Higher-level logic, such as analyzing line-level downtime or identifying causal relationships between machines, belongs in a robust, industry-leading MES platform such as Parsec’s TrakSYS.

At Polytron, we have seen firsthand how an MES tool like this can help deliver measurable results by supporting the following:

- Modeling interdependencies between equipment accurately

- Considering cycle time differences and asynchronous equipment operation

- Accounting for buffers or accumulations across the line

- Achieving a more complete and accurate picture of performance
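As a simple illustration of why buffers matter, consider how much of an upstream stop actually reaches the next machine. The Python sketch below uses a deliberately simplified model: it assumes the buffer is not refilled during the stop, the downstream machine consumes at a constant rate, and conveyor transport delay is ignored. The function and parameter names are hypothetical.

```python
def starved_seconds(upstream_stop_s, buffer_units, downstream_rate_per_s):
    """Seconds the downstream machine is starved by an upstream stop.

    The downstream machine keeps running until the buffer drains,
    so only the remainder of the stop shows up as downstream downtime.
    """
    if downstream_rate_per_s <= 0:
        raise ValueError("downstream rate must be positive")
    drain_time_s = buffer_units / downstream_rate_per_s
    return max(0.0, upstream_stop_s - drain_time_s)


# 120 units in the buffer, consumed at 2 units per second, last 60 seconds.
print(starved_seconds(90, 120, 2))  # 30.0
print(starved_seconds(45, 120, 2))  # 0.0
```

Under these assumptions, a 45-second upstream stop never reaches the downstream machine, while a 90-second stop costs it 30 seconds. Logic that ignores the buffer would either over-count or misattribute that time.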

Just as importantly, an MES can easily provide valuable context to downtime events, such as what product was running, who the operators were, what quality issues occurred at the same time, and much more. By contrast, a “point” solution that only provides downtime tracking natively lacks this contextualization. Additionally, with an MES, operators can view real-time scoreboards showing availability and performance. Based on all this information, meaningful actions such as changes to the way a problematic product is produced or additional training can be implemented.

Structuring Downtime Tracking Systems for Success from the Start

Accurate data starts with clear definitions. When we help clients implement downtime tracking, we begin by interviewing stakeholders across operations, maintenance, and management. Each group has unique needs:

- Operators need upstream and downstream context to respond quickly to faults

- Maintenance teams need event history to identify recurring equipment issues

- Management needs visibility into patterns tied to specific shifts or products

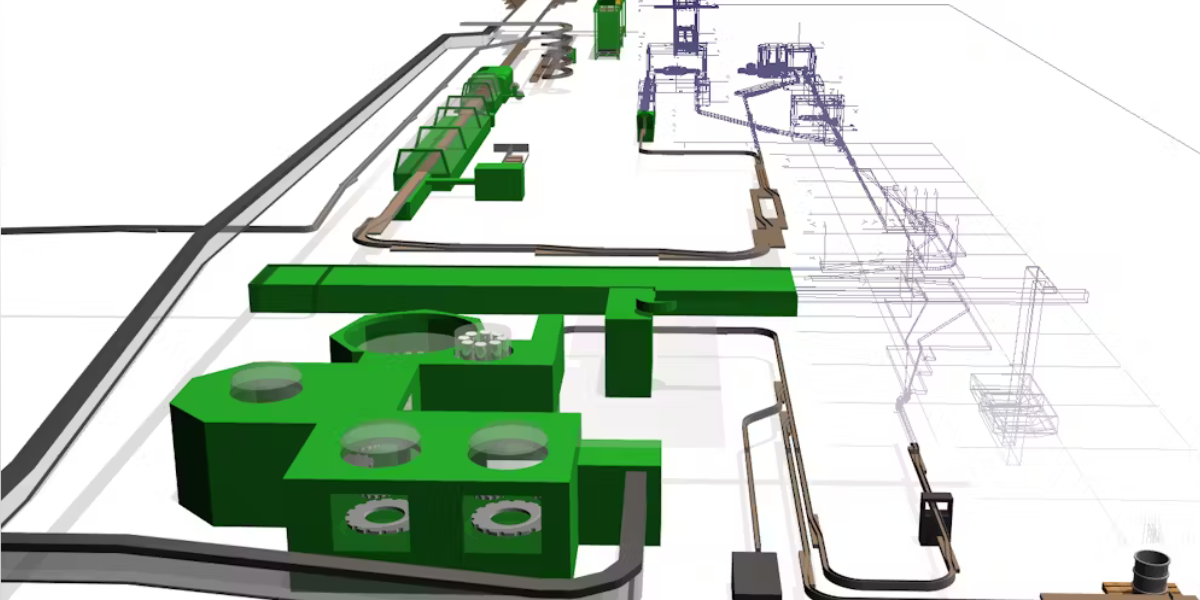

By structuring MES applications to meet the needs of all stakeholders, we can help ensure the right data is captured and available at every level. We can also deploy multi-plant solutions across a manufacturer’s entire enterprise to provide a more comprehensive understanding and the ability to compare line and plant performance across all assets.

The Future of Downtime Data

Today, most downtime data is historized, providing a valuable record of past events. As artificial intelligence (AI) is incorporated into more applications on the plant floor, the next evolution of analytics will use AI to analyze data streams, find correlations, and predict issues before they occur. By combining AI with digital twins and digital threads, manufacturers will be able to model real-time production behavior and link it with contextual MES data to forecast performance and identify potential bottlenecks before they happen.

AI tools such as Parsec’s soon-to-be-released TrakSYS IQ (AI assistant) will allow natural language queries on downtime data to better understand downtime losses, the impact of each loss, and what action(s) should be taken to remedy the problem(s). Future releases are expected to automatically identify these correlations and recommended actions.

Overall, collecting quality data is a key requirement for delivering actionable and AI-driven insights. But the question remains: How accurate is your downtime data? As you consider the questions discussed in this post, the answers may reveal more about your plant’s true performance than you realize. Improving the accuracy of your downtime data could be a fairly straightforward path to helping you achieve greater operational efficiency.